I know next to nothing about MARC,though being a shambrarian I have to fight it sometimes. My knowledge is somewhat binary, absolutely nothing for most fields/subfields/tags but ‘fairly ok’ for the bits I’ve had to wrestle with.

[If you don’t know that MARC21 is an ageing bibliographic metadata standard, move on. This is not the blog post you’re looking for]

Recent encounters with MARC

- Importing MARC files in to our Library System (

TalisCapita Alto), mainly for our e-journals (so users can search our catalogue and find a link to a journal if we subscribe to it online). Many of the MARC records were of poor quality and often did not even state the item was (a) a journal (b) online. Additionally Alto will only import if there is a 001 field, even though the first thing it does is move the 001 field to the 035 field and create its own. To handle these I used a very simple script to run through the MARC file – using MARC::Record – to add an 001/006/007 where required. - Setting up sabre – a web catalogue which searches the records of both the University of Sussex and the University of Brighton – we need to pre-process the MARC records to add extra fields, in particular a field to tell the software (vufind) which organisation the record was from.

Record problems

PC Tools

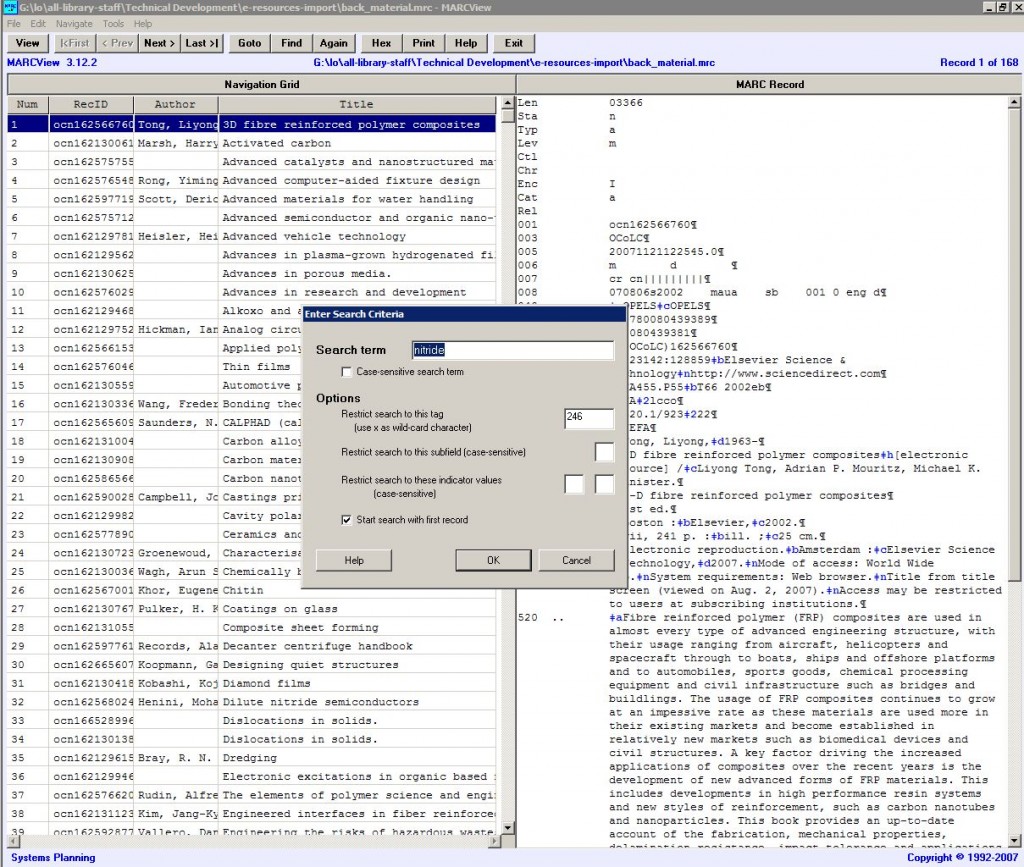

USEMARCON is the final utility. It comes with a GUI interface, both of which can be downloaded from The National Library of Finland. The British Library also have some information on it. Its main use is to convert MARC files from one type of MARC to another, something I haven’t looked in to, but the GUI provides a way to delve in to a set of MARC records.

Back to the problem…

So we were pre-processing MARC records from two Universities before importing them in to vufind using a Perl script which had been supplied by another University.

It turns out the script was crashing on certain records, all records after the problematic record were not being processed. It wasn’t just that script, any perl script using MARC::Record (and MARC::batch) would crash when it hit a certain point.

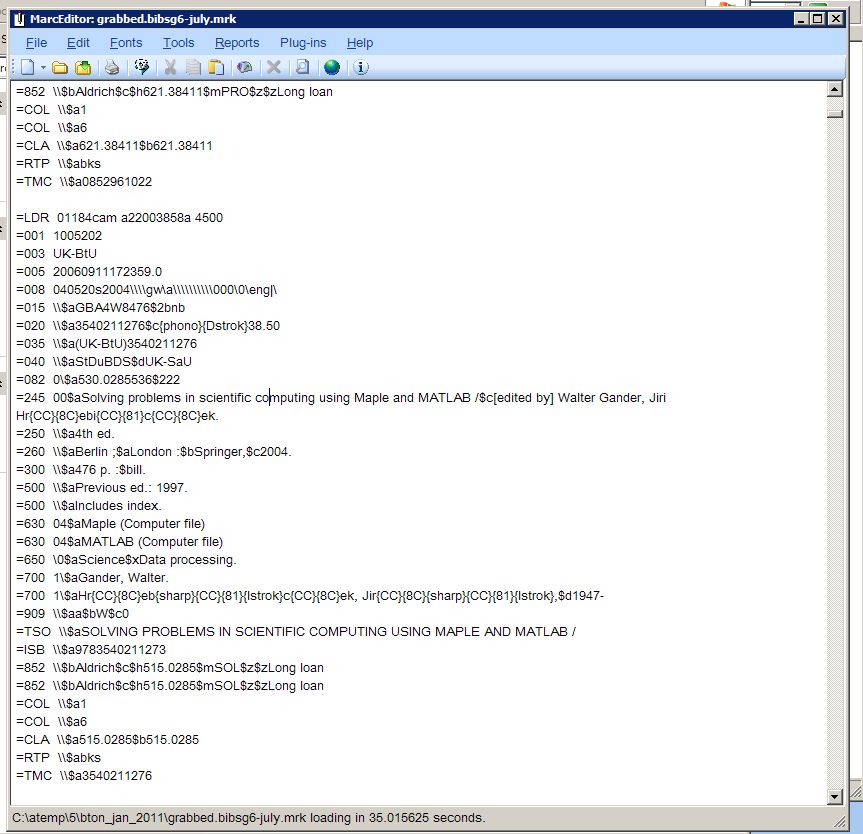

By writing a simple script that just printed out each record we could as least see what the record was immediately before the record causing it to crash (i.e. the last in the list of output). This is where the PC applications were useful. Once we know the record before the problematic record, we could find it using the PC viewers and then move to the next record.

The issue was certain characters (here in the 245 and 700 fields). I haven’t got to the bottom of what the exact issue is. There are two kinds of popular encodings: MARC-8 and records in UTF-8, and this can be designated in the Leader (9th character). I think Alto (via it’s marcgrabber tool) exports in MARC-8 but perhaps the characters in the record did not match the specified encoding.

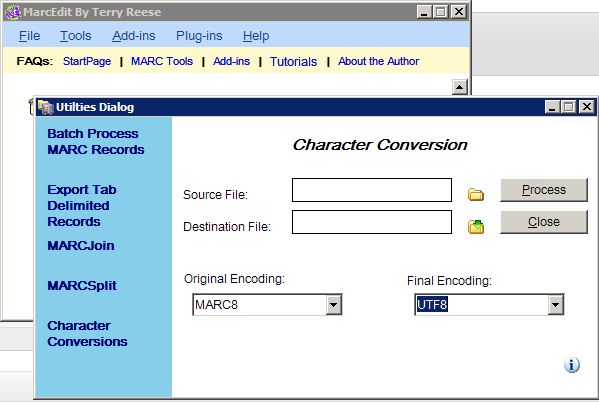

The title (245) on the orignal catalogue looks like this:

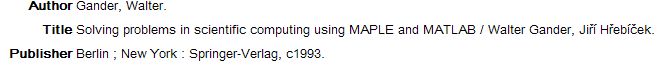

One work around was to use a slightly hidden feature of MarcEdit to convert the file to UTF:

I was then able to run the records through the perl script, and import it in to vufind.

But clearly this was not a sustainable solution. Copying files to my PC and running MarcEdit was not something that would be easy to automate.

Back to MARC::Record

The error message produced looked something like this:

utf8 "xC4" does not map to Unicode at /usr/lib/perl/5.10/Encode.pm line 174

I didn’t find much help via Google, though did find a few mentions of this error related to working with MARC Records.

The issue was that the script loops through each record, the moment it tries to start a loop with a record it does not like it crashes, so there is no way to check for certain characters in the record as it will already be too late.

Unless we use something like exceptions. The closest to this perl has out-of-the-box is eval.

By putting the whole loop in to an eval, if it hits a problem the eval simply passed the flow down to the or do part of the code. But we want to continue processing the records, so this simply calls the eval again, until it reaches the end of the record. You can see a basic working example of this here.

So if you’ve having problems processing a file of MARC records using perl MARC::Record / MARC::batch try wrapping it in a eval. You’ll still loose the records it can not process but it wont stop in it’s tracks (and you can output an error log to record the record number of the records with errors).

Post-script

So, after pulling my hair out, I finally found a way to process a filewhich contains records which cause MARC::Record to crash. It had caused me much stress as I needed to get this working, and quickly, in an automated manner. As I said, the script had been passed to us by another University and it already did quite a few things so I was a little unwilling to rewrite using another language (though a good candidate would be php as the vufind script was written in that language and didn’t seem to have these problems).

But in writing this blog post, I was searching using Google to re-find the various sites and pages I had found when I encountered the problem. And in doing so I had found this: http://keeneworks.posterous.com/marcrecord-and-utf

Yes. I had actually already resolved the issue, and blogged about it, back in early May. I had somehow – worryingly – completely forgotten any of this. Unbelievable! You can find a copy of a script based on that solution (which is a little similar to the one above) here.

So there you are, a few PC applications and a couple of solutions to perl/MARC issue.